Run Large Language Models on Colab with TextGen-WebUI

This blog post will guide you through using a fantastic GitHub repository to effortlessly run Large Language Models (LLMs) on Google Colab with TextGen-WebUI.

Introduction

Tired of limited access to powerful LLMs? This repository by seyf1elislam offers a convenient solution! It provides Colab notebooks that allow you to run various LLMs with just a few clicks.

Repo link: Run LLM - OneClickColab

Available Notebooks

The repository offers two notebooks:

- Run GGUF LLM models in TextGen-webui: This notebook lets you run GGUF models in TextGen-WebUI. Here: Open in GitHub

- Run GPTQ and Exl2 LLM models in TextGen-webui: This notebook lets you run GPTQ and Exl2 models in TextGen-WebUI. Here: Open in GitHub

Getting Started

Here's how to get started:

-

Open the Colab Notebook: Click the "Open in Colab" button for the desired notebook (GGUF or GPTQ/Exl2).

- GGUF notebook (recommended ⭐)

- GPTQ/Exl2 notebook

-

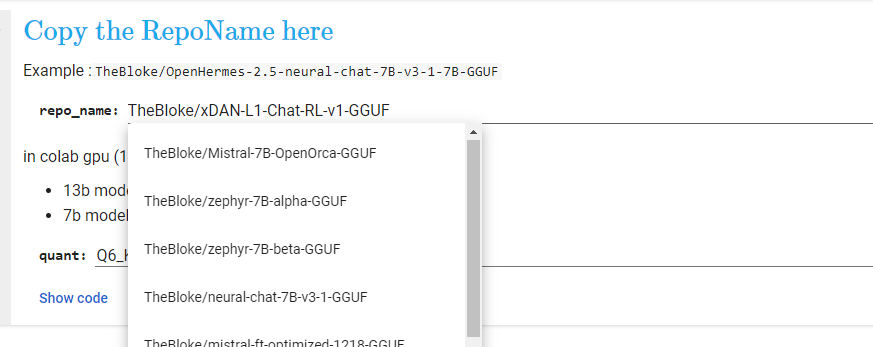

Choose Your Model: Select the LLM model you want to run from the list provided in the notebook.

-

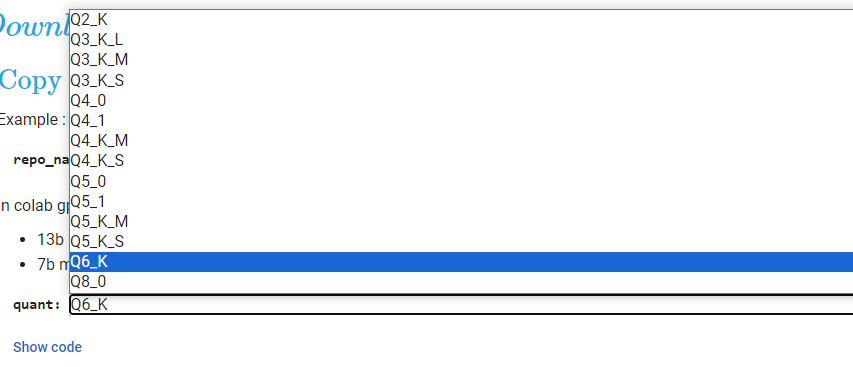

Select Quantization Type (if applicable): Choose the appropriate quantization type for your chosen model (if the notebook supports it).

-

Run the last Cell: Execute the designated cell in the notebook.

-

Visit the Generated Link: A link like

https://****.trycloudflare.com/will be displayed. Open this link in your browser. -

Start the Conversation: You're now ready to interact with your chosen LLM model through the TextGen-WebUI interface!

Quantized Models Sources

For optimal performance, consider using quantized models. You can find these on the Hugging Face repositories of:

- mradermacher (GGUF)

- bartowski (GGUF)

- LoneStriker (exl2, GGUF)

- QuantFactory (GGUF)

You can also search for "GGUF" on Hugging Face to browse the available models. The Hub's GGUF documentation explains how: https://huggingface.co/docs/hub/en/GGUF
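Most of these repositories publish one file per quantization type, so choosing a model usually comes down to choosing a filename. Here is a minimal sketch of that selection step. The helper `pick_gguf_file` and the file listing are hypothetical and made up for illustration; they are not part of the notebooks.

```python
from typing import Iterable, Optional


def pick_gguf_file(filenames: Iterable[str], quant: str) -> Optional[str]:
    """Return the first .gguf filename whose name contains the quant tag.

    Matching is case-insensitive because uploaders differ in how they
    capitalise tags such as Q4_K_M.
    """
    tag = quant.lower()
    for name in sorted(filenames):
        lowered = name.lower()
        if lowered.endswith(".gguf") and tag in lowered:
            return name
    return None


# Hypothetical file listing, made up for illustration only.
example_files = [
    "README.md",
    "example-model.Q4_K_M.gguf",
    "example-model.Q5_K_M.gguf",
    "example-model.Q8_0.gguf",
]

print(pick_gguf_file(example_files, "Q5_K_M"))
print(pick_gguf_file(example_files, "Q2_K"))
```

In practice the file list could come from `huggingface_hub.list_repo_files(repo_id)`. Note that the match is a plain substring test, so a short tag can match more than one file.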

Recommended Models

Here are some exciting models to try:

Latest (11/2024):

- Qwen/Qwen2.5-Coder-14B-Instruct-GGUF: The Q5_K_M or Q4_K_M quantization is highly recommended for coding (⭐⭐⭐⭐⭐⭐).

- Qwen/Qwen2.5-Coder-7B-Instruct-GGUF: The Q8_0 quantization is highly recommended for coding (⭐⭐⭐⭐⭐).

Others:

- QuantFactory/Mistral-Nemo-Instruct-2407-GGUF (12B): The Q5_K_M or Q4_K_M quantization is highly recommended (⭐⭐⭐⭐).

- bartowski/Mistral-Small-Instruct-2409-GGUF (22B): This model works well at Q3_K_M within 15 GB of VRAM (⭐).

- Meta-Llama Models (8B): Explore Meta-Llama-3.1-8B-Instruct-GGUF (Q8_0) and Meta-Llama-3-8B-Instruct-GGUF (Q8_0) (⭐⭐⭐⭐).

- bartowski/gemma-2-9b-it-GGUF (9B): This model offers Q8_0/Q6 quantization (⭐⭐⭐).

Requirements

There are no specific software requirements. Just switch the Colab runtime to a GPU, and the notebook will handle the necessary installations.
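If you want to confirm that the runtime really has a GPU before loading a model, a quick check in a notebook cell can help. This is a minimal sketch that assumes the `nvidia-smi` tool is on the PATH whenever a GPU runtime is active. The helper name `gpu_summary` is made up for illustration and is not part of the notebooks.

```python
import shutil
import subprocess
from typing import Optional


def gpu_summary() -> Optional[str]:
    """Return GPU name and total memory as reported by nvidia-smi, or None."""
    if shutil.which("nvidia-smi") is None:
        return None  # No NVIDIA tools found: likely a CPU-only runtime.
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader"],
            capture_output=True,
            text=True,
            check=False,
            timeout=30,
        )
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None
    return result.stdout.strip() or None


summary = gpu_summary()
print(summary if summary else "No GPU detected; switch the runtime type to GPU.")
```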

Some Tips

- Free Colab T4 GPU (15 GB VRAM) recommendations:

  - 22B model: Up to Q3_K_M (context up to ~8K)

  - 12B model: Up to Q5_K_M (context up to 16K)

  - 8B/7B model: Up to Q8_0 (context up to 16K, if supported)
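The recommendations above follow from simple arithmetic: weight size is roughly the parameter count times bits per weight, divided by 8, and whatever VRAM is left over goes to the context. The sketch below works through that estimate. The bits-per-weight figures are approximate values for llama.cpp quantization types and are an assumption here; real file sizes vary by model.

```python
# Approximate bits per weight for common llama.cpp quantization types.
# Rough figures assumed for illustration; real files vary by model.
APPROX_BITS_PER_WEIGHT = {
    "Q3_K_M": 3.91,
    "Q4_K_M": 4.85,
    "Q5_K_M": 5.69,
    "Q6_K": 6.59,
    "Q8_0": 8.5,
}

T4_VRAM_GB = 15.0  # VRAM figure quoted in the tips above


def estimate_weights_gb(params_billion: float, quant: str) -> float:
    """Estimate weight size in GB: parameters (billions) x bits per weight / 8."""
    return params_billion * APPROX_BITS_PER_WEIGHT[quant] / 8


for params, quant in [(22, "Q3_K_M"), (12, "Q5_K_M"), (8, "Q8_0")]:
    weights = estimate_weights_gb(params, quant)
    headroom = T4_VRAM_GB - weights
    print(f"{params}B @ {quant}: ~{weights:.1f} GB weights, ~{headroom:.1f} GB left for context")
```

Under these assumptions the 22B model at Q3_K_M leaves the least headroom, which is consistent with its shorter recommended context.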